📝About

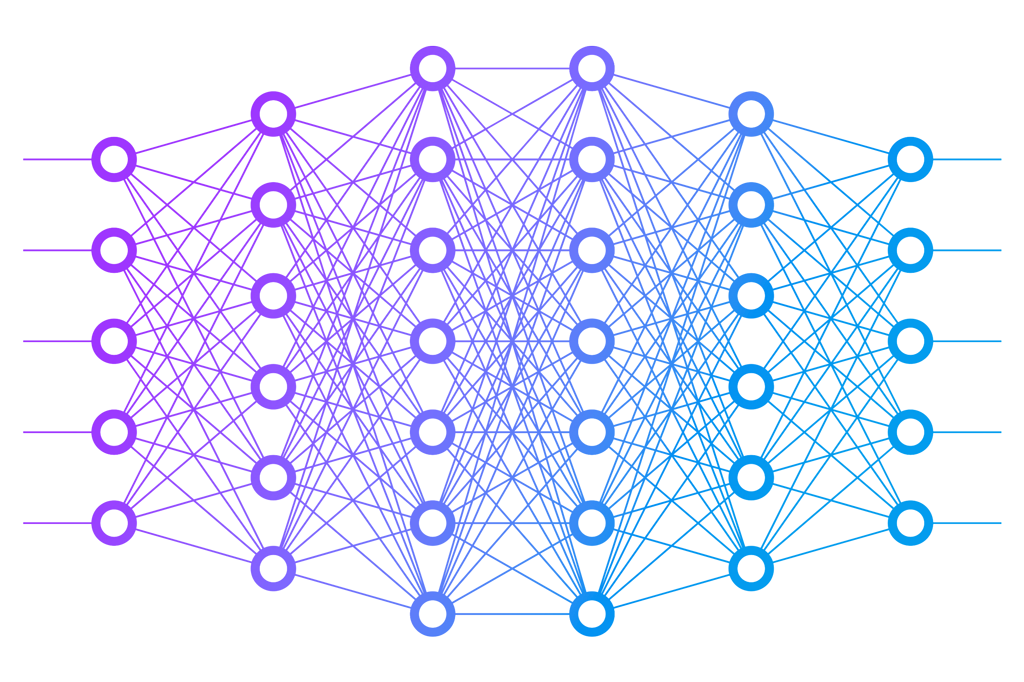

C++/MPI proxies to explore distributed training of DNNs (deep neural networks):

- GPT-2

- GPT-3

- CosmoFlow

- DLRM

These proxies cover:

- Data parallelism: same NN replicated across multiple processors, but each copy processes a different subset of the data

- Operator parallelism: splitting different operations (i.e. layers) of a NN across multiple processors

- Pipeline parallelism: different stages of a NN are processed on different processors, in a pipelined fashion

- Hybrid parallelism: combines two or more of the above, e.g. different parts of the NN are processed on different processors while the data is also split across processors
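As a small illustration of the data-parallel case, the sketch below splits a dataset's sample indices across replicas. It is a plain C++ helper written for this README, not code taken from the proxies; the name `shard_range` and the "first ranks get the remainder" policy are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>

// Hypothetical helper: returns the half-open range [begin, end) of sample
// indices owned by `rank` when `n_samples` are split as evenly as possible
// across `n_ranks` replicas. The first (n_samples % n_ranks) ranks each
// receive one extra sample.
std::pair<std::size_t, std::size_t> shard_range(std::size_t n_samples,
                                                std::size_t n_ranks,
                                                std::size_t rank) {
    assert(n_ranks > 0 && rank < n_ranks);
    const std::size_t base = n_samples / n_ranks;
    const std::size_t extra = n_samples % n_ranks;
    const std::size_t begin = rank * base + (rank < extra ? rank : extra);
    const std::size_t end = begin + base + (rank < extra ? 1 : 0);
    return {begin, end};
}
```

In an MPI program, `rank` and `n_ranks` would typically come from `MPI_Comm_rank` and `MPI_Comm_size`; every replica then runs the same model on its own shard.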

Benchmarking GPU interconnect performance (NCCL/MPI)

- MPI for distributed training: manages communication between nodes, enabling data-parallel and model-parallel strategies

- NCCL for optimized GPU communication: common collective operations such as `all-reduce`, performed on NVIDIA GPUs
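To make the all-reduce pattern concrete, here is a minimal single-process simulation of a ring all-reduce (sum). It uses no MPI or NCCL calls: each inner vector stands in for one rank's buffer. The ring schedule is one common way to implement all-reduce; whether a given run of the proxies uses it is not stated here, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simulates a ring all-reduce (sum) over bufs.size() virtual ranks.
// Assumes all buffers have the same length, divisible by the rank count.
void ring_allreduce_sum(std::vector<std::vector<double>>& bufs) {
    const std::size_t p = bufs.size();
    if (p < 2) return;
    const std::size_t n = bufs[0].size();
    assert(n % p == 0);
    for (const auto& b : bufs) assert(b.size() == n);
    const std::size_t chunk = n / p;

    // One ring step: every rank r sends one chunk to rank (r + 1) % p.
    // Outgoing chunks are snapshotted first so all sends appear simultaneous.
    auto exchange = [&](std::size_t step, std::size_t offset, bool accumulate) {
        std::vector<std::vector<double>> sent(p);
        std::vector<std::size_t> idx(p);
        for (std::size_t r = 0; r < p; ++r) {
            idx[r] = (r + offset + p - step) % p;  // chunk index sent by rank r
            const auto first =
                bufs[r].begin() + static_cast<std::ptrdiff_t>(idx[r] * chunk);
            sent[r].assign(first, first + static_cast<std::ptrdiff_t>(chunk));
        }
        for (std::size_t r = 0; r < p; ++r) {
            auto& dst = bufs[(r + 1) % p];
            for (std::size_t i = 0; i < chunk; ++i) {
                double& slot = dst[idx[r] * chunk + i];
                slot = accumulate ? slot + sent[r][i] : sent[r][i];
            }
        }
    };

    // Phase 1, reduce-scatter: after p - 1 steps, rank r holds the fully
    // summed chunk (r + 1) % p.
    for (std::size_t step = 0; step + 1 < p; ++step) exchange(step, 0, true);
    // Phase 2, all-gather: circulate the finished chunks around the ring.
    for (std::size_t step = 0; step + 1 < p; ++step) exchange(step, 1, false);
}
```

Each rank sends and receives `2 * (p - 1)` chunks of size `n / p`, so per-rank traffic stays close to `2n` regardless of the number of ranks, which is why ring schedules are popular for large gradient buffers.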

Scaling techniques for model parallelism

- Essential for training large models, i.e. ones that do not fit in the memory of a single GPU

- The GPT-3 example shows a hybrid approach that combines model and data parallelism to scale out training of extremely large models (GPT-3 has over 150 billion parameters) across multiple GPUs and nodes
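A quick back-of-the-envelope check shows why such a model cannot live on one device. The helper below is a sketch with assumed inputs: 2 bytes per parameter (half precision) and an 80 GB device are illustrative values, not figures from this repository, and the estimate covers weights only.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: minimum number of devices needed just to hold the
// weights. Ignores gradients, optimizer state, and activations, so real
// requirements are higher.
std::uint64_t min_devices_for_weights(std::uint64_t n_params,
                                      std::uint64_t bytes_per_param,
                                      std::uint64_t device_mem_bytes) {
    assert(device_mem_bytes > 0);
    const std::uint64_t total_bytes = n_params * bytes_per_param;
    // Ceiling division: a partially filled device still counts as one device.
    return (total_bytes + device_mem_bytes - 1) / device_mem_bytes;
}
```

With 150 billion parameters at 2 bytes each, the weights alone take 300 GB, so `min_devices_for_weights(150000000000ULL, 2, 80000000000ULL)` returns 4 under these assumptions.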

Optimizing CNNs

- The CosmoFlow example illustrates distributed training of a CNN, leveraging GPU acceleration for performance gains.

Results

- Training was distributed across 8 nodes and compared against a 1-node baseline.

- This gave a 2.3x speedup, within the 2x-4x target range.
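For context, the speedup can be turned into a parallel efficiency (speedup divided by node count). The snippet is a minimal sketch with hypothetical helper names; it only restates the arithmetic on the numbers reported above.

```cpp
#include <cassert>

// Parallel efficiency: fraction of ideal linear scaling actually achieved.
double parallel_efficiency(double speedup, int n_nodes) {
    assert(n_nodes > 0);
    return speedup / static_cast<double>(n_nodes);
}

// True when the measured speedup lies inside the inclusive target range.
bool in_target_range(double speedup, double lo, double hi) {
    return speedup >= lo && speedup <= hi;
}
```

For the reported run, `parallel_efficiency(2.3, 8)` is about 0.29, and `in_target_range(2.3, 2.0, 4.0)` is true.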

💻 How to build

Compile via:

mpicxx communications/gpt-2.cpp -o gpt-2

Then run:

mpirun -n 32 ./gpt-2

Set the total number of Transformer layers and the total number of pipeline stages via command-line arguments:

mpirun -n 32 ./gpt-2 64 8
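One way to picture this configuration is sketched below, reading `64` as the layer count and `8` as the stage count. The mapping is an assumption made for illustration (consecutive ranks fill one pipeline, and layers are split evenly across stages); the proxy's actual rank layout may differ, and `Layout` and `layout_for_rank` are hypothetical names.

```cpp
#include <cassert>

// Where a rank sits in a hybrid pipeline + data-parallel layout.
struct Layout {
    int stage;        // pipeline stage index
    int replica;      // data-parallel replica index
    int first_layer;  // first Transformer layer owned by this stage
    int last_layer;   // last Transformer layer owned by this stage (inclusive)
};

// Assumed mapping: stage = rank % n_stages, replica = rank / n_stages.
Layout layout_for_rank(int rank, int world_size, int n_layers, int n_stages) {
    assert(n_stages > 0 && world_size % n_stages == 0);
    assert(n_layers % n_stages == 0);
    assert(rank >= 0 && rank < world_size);
    const int layers_per_stage = n_layers / n_stages;
    const int stage = rank % n_stages;
    const int replica = rank / n_stages;
    return {stage, replica, stage * layers_per_stage,
            (stage + 1) * layers_per_stage - 1};
}
```

Under this assumed layout, 32 ranks with 8 stages form 4 data-parallel replicas of an 8-stage pipeline, and each stage holds 8 of the 64 layers.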
